The call came at 3 AM. Our AI agents were responding, but something was wrong – they were giving bizarre answers, burning through our token budget, and we had no idea why. That night taught me a painful lesson: traditional monitoring isn’t enough for AI systems. You need observability designed specifically for the unique challenges of artificial intelligence.

Observability Fundamentals: Why AI Agents Are Different

That sleepless night showed me why monitoring AI agents is unique. Traditional applications have predictable failure modes – they crash, time out, or return errors. AI agents fail subtly. They confidently provide wrong answers, gradually drift from their intended behavior, or suddenly start consuming 10x more resources for no apparent reason.

The challenges I’ve encountered are unlike anything in traditional software:

- Non-deterministic behavior: The same input doesn’t always produce the same output

- Quality degradation: Performance can deteriorate without obvious errors

- Context dependencies: Behavior changes based on conversation history

- Cost explosions: Token usage can spike without warning

- Hallucination detection: Agents can generate plausible but false information

Key metrics to track evolved through painful experience. Beyond traditional metrics like latency and error rates, I learned to monitor:

- Semantic drift: How far responses deviate from expected patterns

- Confidence distributions: Changes in agent certainty over time

- Token efficiency: Output quality per token consumed

- Conversation coherence: Logical consistency across interactions

- Prompt injection attempts: Security-specific behavioral anomalies
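To make the semantic drift metric from the list above concrete, here is a minimal sketch. It assumes drift is defined as one minus the similarity between a response and a reference answer, and it uses a bag-of-words cosine similarity purely as a dependency-free stand-in; a real system would more likely compare embedding vectors. The function names are hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercase word counts; a crude stand-in for an embedding vector."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def semantic_drift(response: str, reference: str) -> float:
    """Drift is 1 - similarity: near 0 for matching wording, 1.0 for no overlap."""
    return 1.0 - cosine_similarity(bag_of_words(response), bag_of_words(reference))


reference = "Your order ships within two business days."
on_topic = "Your order ships within two business days."
off_topic = "Bananas are an excellent source of potassium."
print(round(semantic_drift(on_topic, reference), 3))
print(round(semantic_drift(off_topic, reference), 3))
```

Word overlap misses paraphrases, so treat the score as a cheap first signal rather than a verdict.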

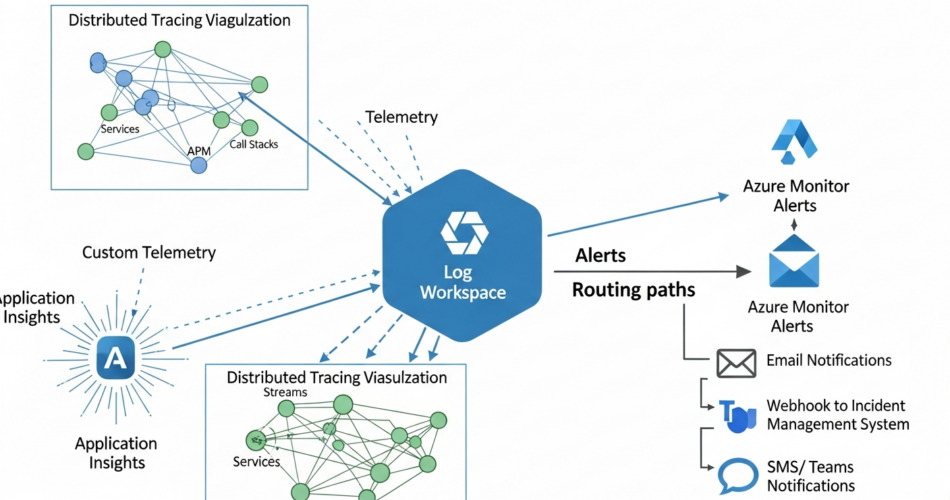

Azure Monitor integration became my foundation, but I had to extend it significantly for AI-specific needs. The standard metrics were a starting point, not the destination.

Implementation Guide: Building Comprehensive Observability

Setting up Application Insights for AI agents required a complete rethink of what to track. Here’s my evolved approach:

Core Telemetry Setup:

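using System.Collections.Generic;
using Microsoft.ApplicationInsights;
using Microsoft.ApplicationInsights.DataContracts;

public class AIAgentTelemetry
{
    private readonly TelemetryClient _telemetryClient;

    // Inject the client so the readonly field is actually initialized.
    public AIAgentTelemetry(TelemetryClient telemetryClient)
    {
        _telemetryClient = telemetryClient;
    }

    // SanitizeForLogging, SummarizeResponse, HashSystemPrompt and CalculateCost
    // are helper methods assumed to be defined elsewhere in this class.
    public void TrackAgentInteraction(AgentRequest request, AgentResponse response)
    {
        // String dimensions: sanitize, summarize or hash anything that may hold user data.
        var properties = new Dictionary<string, string>
        {
            ["AgentId"] = request.AgentId,
            ["ConversationId"] = request.ConversationId,
            ["Prompt"] = SanitizeForLogging(request.Prompt),
            ["ResponseSummary"] = SummarizeResponse(response.Content),
            ["Model"] = request.ModelName,
            ["SystemPrompt"] = HashSystemPrompt(request.SystemPrompt)
        };

        // Numeric measurements attached to the same event.
        var metrics = new Dictionary<string, double>
        {
            ["TokensUsed"] = response.TokenCount,
            ["ResponseTime"] = response.Duration.TotalMilliseconds,
            ["Confidence"] = response.Confidence,
            ["Temperature"] = request.Temperature,
            ["EstimatedCost"] = CalculateCost(response.TokenCount, request.ModelName)
        };

        _telemetryClient.TrackEvent("AgentInteraction", properties, metrics);

        // Track anomalies; pass the properties so the warning can be tied to an agent and conversation.
        if (response.Confidence < 0.5)
        {
            _telemetryClient.TrackTrace("LowConfidenceResponse", SeverityLevel.Warning, properties);
        }
    }
}

Custom telemetry implementation became crucial for AI-specific insights: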

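using System.Collections.Generic;
using System.Globalization;
using System.Linq;
using Microsoft.ApplicationInsights;

public class SemanticTelemetry
{
    private readonly TelemetryClient _telemetryClient;

    public SemanticTelemetry(TelemetryClient telemetryClient)
    {
        _telemetryClient = telemetryClient;
    }

    public void TrackSemanticDrift(string response, string expectedPattern)
    {
        // CalculateSemanticSimilarity is assumed to be defined elsewhere and to return a score in [0, 1].
        var similarity = CalculateSemanticSimilarity(response, expectedPattern);

        if (similarity < 0.7)
        {
            _telemetryClient.TrackMetric("SemanticDrift", 1 - similarity);
            _telemetryClient.TrackEvent("SemanticAnomalyDetected",
                new Dictionary<string, string>
                {
                    ["Response"] = response,
                    ["ExpectedPattern"] = expectedPattern,
                    // Invariant culture keeps the decimal separator stable across servers.
                    ["Similarity"] = similarity.ToString(CultureInfo.InvariantCulture)
                });
        }
    }

    public void TrackHallucinationRisk(AgentResponse response)
    {
        var hallucinationIndicators = new[]
        {
            response.ContainsUnverifiedClaims,
            response.ConfidenceVariance > 0.3,
            response.SourceCitations.Count == 0,
            response.ContainsAbsoluteStatements
        };

        // Risk score is the fraction of indicators that fired (0.0 to 1.0).
        var riskScore = hallucinationIndicators.Count(i => i) / (double)hallucinationIndicators.Length;
        _telemetryClient.TrackMetric("HallucinationRiskScore", riskScore);
    }
}

Log Analytics workspace configuration taught me to structure data for AI analysis: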

// Custom query for AI agent analysis
AIAgentLogs
| where TimeGenerated > ago(1h)
| summarize
    AvgConfidence = avg(Confidence),
    TokensPerMinute = sum(TokensUsed),        // bins are one minute wide, so the sum is already per minute
    CostPerHour = sum(EstimatedCost) * 60,    // one minute of spend projected to an hourly rate
    AnomalyCount = countif(Confidence < 0.5)
    by AgentId, bin(TimeGenerated, 1m)
| where AnomalyCount > 5 or CostPerHour > 100
| project TimeGenerated, AgentId, Issue = case(
    AnomalyCount > 5 and CostPerHour > 100, "Multiple Issues",  // check the combined case first so it is reachable
    AnomalyCount > 5, "High Anomaly Rate",
    "Cost Spike"
)

Code examples for instrumentation became templates I use everywhere:

from opentelemetry import metrics, trace


class ObservableAIAgent:
    # AgentRequest and AgentResponse are the application's own types, and
    # _internal_process / _check_anomalies are assumed to be defined elsewhere.
    def __init__(self, agent_id: str):
        self.agent_id = agent_id
        self.tracer = trace.get_tracer(__name__)
        self.meter = metrics.get_meter(__name__)

        # Create metrics
        self.token_counter = self.meter.create_counter(
            "ai_agent_tokens_used",
            description="Total tokens consumed by agent",
        )
        self.confidence_histogram = self.meter.create_histogram(
            "ai_agent_confidence",
            description="Distribution of response confidence scores",
        )

    async def process_request(self, request: "AgentRequest") -> "AgentResponse":
        # One span per interaction, tagged so traces can be filtered by agent, model and conversation
        with self.tracer.start_as_current_span("agent_interaction") as span:
            span.set_attributes({
                "agent.id": self.agent_id,
                "request.model": request.model,
                "request.conversation_id": request.conversation_id,
            })

            try:
                # Process request
                response = await self._internal_process(request)

                # Record metrics
                self.token_counter.add(response.token_count, {
                    "agent_id": self.agent_id,
                    "model": request.model,
                })
                self.confidence_histogram.record(response.confidence, {
                    "agent_id": self.agent_id,
                })

                # Detect anomalies
                self._check_anomalies(request, response)

                return response
            except Exception as e:
                # Attach the failure to the span, then let the caller handle it
                span.record_exception(e)
                span.set_status(trace.Status(trace.StatusCode.ERROR))
                raise

Performance Monitoring: Beyond Basic Metrics

Response time tracking for AI agents revealed patterns I hadn’t expected:

- First token latency (critical for user experience)

- Total generation time (varies with output length)

- Cache hit impact (dramatic improvements possible)

- Model warm-up effects (cold starts can add seconds)
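The first two items in the list above can be measured separately whenever the response is streamed. The sketch below is one way to do it, assuming the model output arrives as an iterator of tokens; `fake_token_stream` is a hypothetical stand-in for a real streaming client, not an actual API.

```python
import time
from typing import Iterable, Iterator, Optional


def fake_token_stream(tokens: Iterable[str], first_delay: float,
                      per_token_delay: float) -> Iterator[str]:
    """Hypothetical stand-in for a streaming model response."""
    for index, token in enumerate(tokens):
        time.sleep(first_delay if index == 0 else per_token_delay)
        yield token


def measure_stream(stream: Iterator[str]) -> dict:
    """Consume a token stream, recording first-token latency and total generation time."""
    start = time.perf_counter()
    first_token_latency: Optional[float] = None
    token_count = 0
    for _ in stream:
        if first_token_latency is None:
            # Time from request start until the first token is available
            first_token_latency = time.perf_counter() - start
        token_count += 1
    total_time = time.perf_counter() - start
    return {
        "first_token_latency_s": first_token_latency,
        "total_time_s": total_time,
        "token_count": token_count,
    }


result = measure_stream(fake_token_stream(["Hello", ",", " world"], 0.05, 0.01))
print(result)
```

Tracking the two numbers separately matters because a long answer can have a perfectly healthy first-token latency and still a large total time.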

Token usage analytics became my cost control center:

import statistics
from typing import List


class TokenAnalytics:
    # _calculate_success_cost, _identify_expensive_patterns and alert are
    # assumed to be defined elsewhere in this class.
    def analyze_token_efficiency(self, interactions: List["AgentInteraction"]):
        if not interactions:
            return {}  # statistics.mean raises StatisticsError on empty input

        results = {
            "total_tokens": sum(i.token_count for i in interactions),
            "avg_tokens_per_request": statistics.mean(i.token_count for i in interactions),
            "token_waste_ratio": self._calculate_waste_ratio(interactions),
            "cost_per_successful_interaction": self._calculate_success_cost(interactions),
            "expensive_patterns": self._identify_expensive_patterns(interactions),
        }

        # Alert on concerning patterns
        if results["token_waste_ratio"] > 0.3:
            self.alert("High token waste detected", results)

        return results

    def _calculate_waste_ratio(self, interactions):
        # Share of all tokens spent on interactions that were marked unsuccessful
        failed_tokens = sum(i.token_count for i in interactions if not i.successful)
        total_tokens = sum(i.token_count for i in interactions)
        return failed_tokens / total_tokens if total_tokens > 0 else 0

Error rate monitoring for AI is nuanced – not all errors are equal:

error_categories:
  critical:
    - hallucination_detected
    - prompt_injection_attempt
    - data_leak_risk
  operational:
    - token_limit_exceeded
    - timeout
    - model_unavailable
  quality:
    - low_confidence_response
    - semantic_drift
    - context_confusion
  user_experience:
    - slow_first_token
    - incomplete_response
    - formatting_error

Capacity planning metrics taught me to think differently about scale:

- Token consumption growth rate

- Peak conversation complexity

- Context window utilization

- Concurrent conversation limits

- Cache effectiveness at scale
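Two of the metrics above, token consumption growth rate and context window utilization, reduce to simple arithmetic. The sketch below shows one possible calculation; the function names, the compound-growth model, and the sample numbers are all illustrative assumptions.

```python
import math
from typing import List, Optional


def daily_growth_rate(daily_tokens: List[int]) -> float:
    """Compound day-over-day growth as a fraction (0.10 means +10% per day)."""
    if len(daily_tokens) < 2 or daily_tokens[0] <= 0:
        return 0.0
    periods = len(daily_tokens) - 1
    return (daily_tokens[-1] / daily_tokens[0]) ** (1 / periods) - 1


def days_until_quota(current: int, rate: float, quota: int) -> Optional[int]:
    """Days until daily usage reaches the quota at this growth rate (None if it never does)."""
    if current >= quota:
        return 0
    if rate <= 0 or current <= 0:
        return None
    return math.ceil(math.log(quota / current) / math.log(1 + rate))


def context_window_utilization(prompt_tokens: int, context_window: int) -> float:
    """Fraction of the model's context window consumed by the prompt."""
    if context_window <= 0:
        raise ValueError("context_window must be positive")
    return prompt_tokens / context_window


usage = [100_000, 110_000, 121_000]  # hypothetical daily token totals
rate = daily_growth_rate(usage)
print(f"growth per day: {rate:.1%}")
print("days until a 200k/day quota:", days_until_quota(usage[-1], rate, 200_000))
print(f"context utilization: {context_window_utilization(6_000, 8_000):.0%}")
```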

Alerting and Response: Catching Problems Early

Alert rule configuration evolved through incidents:

{
  "alert_rules": [
    {
      "name": "Semantic Drift Detection",
      "condition": "avg(semantic_similarity) < 0.7 for 5 minutes",
      "severity": "warning",
      "action": "notify_team"
    },
    {
      "name": "Cost Spike Alert",
      "condition": "token_rate > 10000/minute OR hourly_cost > $50",
      "severity": "critical",
      "action": "throttle_and_alert"
    },
    {
      "name": "Hallucination Risk",
      "condition": "hallucination_score > 0.8 on 3 consecutive responses",
      "severity": "critical",
      "action": "disable_agent_and_escalate"
    },
    {
      "name": "Conversation Coherence Loss",
      "condition": "context_confusion_rate > 0.2",
      "severity": "warning",
      "action": "increase_logging_detail"
    }
  ]
}

Automated response workflows saved my sanity:

class AutomatedResponseHandler:
    async def handle_alert(self, alert: Alert):
        if alert.type == "cost_spike":
            # Immediate throttling
            await self.throttle_agent(alert.agent_id, reduction=0.5)
            # Switch to cheaper model
            await self.downgrade_model(alert.agent_id)
            # Notify finance team
            await self.notify_cost_alert(alert)

        elif alert.type == "semantic_drift":
            # Increase monitoring
            await self.enable_detailed_logging(alert.agent_id)
            # Rollback to previous prompt version
            await self.rollback_prompt(alert.agent_id)
            # Schedule manual review
            await self.create_review_task(alert)

        elif alert.type == "security_threat":
            # Immediate isolation
            await self.isolate_agent(alert.agent_id)
            # Preserve evidence
            await self.snapshot_conversation_state(alert)
            # Escalate to security team
            await self.security_escalation(alert)

Escalation procedures became crucial for AI incidents:

- Level 1: Automated mitigation (throttling, model switching)

- Level 2: On-call engineer intervention (prompt adjustment, cache clearing)

- Level 3: AI team escalation (model behavior investigation)

- Level 4: Executive notification (major cost overruns, security breaches)

Dashboard creation focused on actionable insights:

Real-Time Operations Dashboard:

- Active conversations and their states

- Token burn rate with cost projection

- Response time percentiles (p50, p95, p99)

- Error rate by category

- Confidence score distribution
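For the response time percentiles on this dashboard, a nearest-rank calculation is often enough. This is a minimal sketch with hypothetical function names and made-up latencies; a production dashboard would normally let the metrics backend compute percentiles.

```python
from typing import Dict, List


def percentile(sorted_values: List[float], pct: int) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not sorted_values:
        raise ValueError("no samples")
    # Integer ceiling of pct/100 * n, which avoids floating-point rounding surprises
    rank = max(1, (pct * len(sorted_values) + 99) // 100)
    return sorted_values[rank - 1]


def latency_summary(latencies_ms: List[float]) -> Dict[str, float]:
    """p50, p95 and p99 for a batch of response times."""
    ordered = sorted(latencies_ms)
    return {f"p{p}": percentile(ordered, p) for p in (50, 95, 99)}


# Hypothetical sample: mostly fast responses with a slow tail
latencies = [120] * 97 + [900, 1500, 4000]
print(latency_summary(latencies))
```

In a sample like this the median looks healthy while p99 exposes the slow tail, which is why the dashboard shows all three.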

AI Health Dashboard:

- Semantic drift trends

- Hallucination risk scores

- Prompt injection attempts

- Model performance comparison

- Cache hit rates

Cost Management Dashboard:

- Real-time spend vs. budget

- Cost per conversation

- Token efficiency trends

- Model cost comparison

- Department/user attribution
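Two of the cost dashboard figures, cost per conversation and spend versus budget, can be derived from per-interaction cost events. The sketch below assumes each event is a `(conversation_id, cost)` pair and uses a simple linear run-rate projection; both are illustrative assumptions rather than a description of any particular billing API.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def cost_per_conversation(events: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Sum the estimated cost of every interaction, grouped by conversation ID."""
    totals: Dict[str, float] = defaultdict(float)
    for conversation_id, cost in events:
        totals[conversation_id] += cost
    return dict(totals)


def projected_month_end_spend(spend_to_date: float, day_of_month: int,
                              days_in_month: int) -> float:
    """Linear projection: assumes the average daily spend so far continues unchanged."""
    if day_of_month < 1:
        raise ValueError("day_of_month must be at least 1")
    return spend_to_date / day_of_month * days_in_month


events = [("conv-a", 0.25), ("conv-b", 0.5), ("conv-a", 0.25)]  # hypothetical costs in dollars
print(cost_per_conversation(events))

budget = 800.0
projection = projected_month_end_spend(spend_to_date=300.0, day_of_month=10, days_in_month=30)
print(f"projected {projection:.2f} vs budget {budget:.2f}; over budget: {projection > budget}")
```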

Real-World Monitoring Patterns

Patterns emerged from production experience:

The Gradual Degradation Pattern:

Agents slowly drift from intended behavior. Solution: Baseline establishment and continuous comparison.
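One simple way to implement baseline comparison is to record a quality score during a known-good period and measure how far recent scores have moved from it. The sketch below uses a z-score against the baseline; the score, the threshold of 3, and the function names are assumptions for illustration.

```python
import math
import statistics
from typing import List


def drift_z_score(baseline: List[float], recent: List[float]) -> float:
    """How many baseline standard deviations the recent mean sits from the baseline mean."""
    if len(baseline) < 2 or not recent:
        raise ValueError("need at least two baseline samples and one recent sample")
    baseline_mean = statistics.mean(baseline)
    baseline_stdev = statistics.stdev(baseline)
    shift = statistics.mean(recent) - baseline_mean
    if baseline_stdev == 0:
        return 0.0 if shift == 0 else math.copysign(float("inf"), shift)
    return shift / baseline_stdev


def is_degraded(baseline: List[float], recent: List[float], threshold: float = 3.0) -> bool:
    """Flag degradation when the recent mean falls more than `threshold` deviations below baseline."""
    return drift_z_score(baseline, recent) < -threshold


baseline_scores = [0.80, 0.82, 0.78, 0.81, 0.79]  # hypothetical quality scores from a good week
recent_scores = [0.60, 0.62, 0.58]
print(round(drift_z_score(baseline_scores, recent_scores), 2))
print(is_degraded(baseline_scores, recent_scores))
```

Because the drift is gradual, the baseline should be frozen from a known-good period rather than recomputed on a rolling basis, or it will drift along with the agent.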

The Context Explosion Pattern:

Conversations grow unbounded, consuming massive tokens. Solution: Context window monitoring and automatic summarization triggers.
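A summarization trigger can be as simple as a utilization threshold on the context window. In this sketch the 80% threshold, the four-characters-per-token estimate, and the `summarize` callback are all assumptions; a real system would count tokens with the model's tokenizer and call a model to produce the summary.

```python
from typing import Callable, List


def estimate_tokens(text: str) -> int:
    """Very rough estimate (about 4 characters per token); swap in a real tokenizer."""
    return max(1, len(text) // 4)


def should_summarize(history: List[str], context_window: int, threshold: float = 0.8) -> bool:
    """True once the conversation history fills `threshold` of the context window."""
    used = sum(estimate_tokens(message) for message in history)
    return used / context_window >= threshold


def compact_history(history: List[str], keep_recent: int,
                    summarize: Callable[[List[str]], str]) -> List[str]:
    """Replace everything except the most recent turns with a single summary message."""
    if keep_recent < 1:
        raise ValueError("keep_recent must be at least 1")
    if len(history) <= keep_recent:
        return list(history)
    older, recent = history[:-keep_recent], history[-keep_recent:]
    return [summarize(older)] + recent


history = ["x" * 400] * 10  # ten messages of roughly 100 tokens each
if should_summarize(history, context_window=1200):
    history = compact_history(
        history, keep_recent=2,
        summarize=lambda messages: f"[summary of {len(messages)} messages]",
    )
print(len(history), history[0])
```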

The Confidence Cliff Pattern:

Agent confidence suddenly drops across all responses. Solution: Model health checks and automatic fallback.

The Cost Spiral Pattern:

Token usage grows exponentially with conversation length. Solution: Progressive token budgets and conversation limits.
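Progressive token budgets can be sketched as a per-turn allowance that shrinks as the conversation gets longer, combined with hard caps on turns and total tokens. The decay factor, floor, and limits below are arbitrary illustrative values, and the class name is hypothetical.

```python
def turn_budget(turn: int, base_budget: int = 4000, decay: float = 0.9, floor: int = 500) -> int:
    """Per-turn token allowance that shrinks geometrically with the (1-based) turn number."""
    return max(floor, int(base_budget * decay ** (turn - 1)))


class ConversationBudget:
    """Tracks one conversation and rejects requests that exceed its limits."""

    def __init__(self, max_turns: int = 20, max_total_tokens: int = 40_000):
        self.max_turns = max_turns
        self.max_total_tokens = max_total_tokens
        self.turns = 0
        self.total_tokens = 0

    def allow(self, requested_tokens: int) -> bool:
        """Return True and record the usage if the next turn fits every limit."""
        next_turn = self.turns + 1
        if next_turn > self.max_turns:
            return False
        if requested_tokens > turn_budget(next_turn):
            return False
        if self.total_tokens + requested_tokens > self.max_total_tokens:
            return False
        self.turns = next_turn
        self.total_tokens += requested_tokens
        return True


budget = ConversationBudget(max_turns=3)
for requested in (3000, 3000, 3000, 100):
    print(requested, budget.allow(requested))
```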

Lessons from Production Incidents

The Great Hallucination Event: An agent started confidently stating our company was founded in 1823 (we were founded in 2019). Lesson: Monitor for factual consistency.

The Infinite Loop Incident: Two agents got stuck asking each other for clarification. Cost: $2,000 in 30 minutes. Lesson: Circuit breakers for agent interactions.
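A circuit breaker for agent-to-agent traffic might look like the sketch below. It assumes a breaker instance per pair of agents that opens when too many messages are exchanged inside a sliding time window or when cumulative cost passes a cap; the class name and limits are hypothetical.

```python
import time
from collections import deque
from typing import Deque, Optional


class AgentCircuitBreaker:
    """Opens when two agents exchange too many messages in a window or spend too much overall."""

    def __init__(self, max_exchanges: int = 10, window_seconds: float = 60.0,
                 max_cost: float = 5.0):
        self.max_exchanges = max_exchanges
        self.window_seconds = window_seconds
        self.max_cost = max_cost
        self.timestamps: Deque[float] = deque()
        self.cost = 0.0
        self.open = False

    def record(self, cost: float, now: Optional[float] = None) -> bool:
        """Record one agent-to-agent message; return True if the exchange may continue."""
        if self.open:
            return False
        now = time.monotonic() if now is None else now
        self.timestamps.append(now)
        self.cost += cost
        # Drop messages that have fallen out of the sliding window
        while self.timestamps and now - self.timestamps[0] > self.window_seconds:
            self.timestamps.popleft()
        if len(self.timestamps) > self.max_exchanges or self.cost > self.max_cost:
            self.open = True  # stays open until someone resets it
        return not self.open


breaker = AgentCircuitBreaker(max_exchanges=3, window_seconds=60, max_cost=100)
print([breaker.record(cost=0.01, now=t) for t in range(5)])
```

Once open, the breaker stays open until an operator resets it, which is usually the safer default for a loop that is costing money.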

The Context Confusion Crisis: Agent started mixing up conversations, giving user A information about user B. Lesson: Conversation isolation monitoring.
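One inexpensive form of conversation isolation monitoring is to scan each outgoing response for identifiers known to belong to a different user. The sketch below assumes a hypothetical mapping from user ID to that user's identifiers (order numbers, email addresses); plain substring matching is a deliberate simplification.

```python
from typing import Dict, List, Set


def find_leaks(response: str, owner: str,
               identifiers_by_user: Dict[str, Set[str]]) -> List[str]:
    """Return the IDs of other users whose known identifiers appear in the owner's response."""
    text = response.lower()
    owner_ids = {i.lower() for i in identifiers_by_user.get(owner, set())}
    leaked = []
    for user, identifiers in identifiers_by_user.items():
        if user == owner:
            continue
        # Ignore identifiers the owner legitimately shares with another user
        if any(i.lower() in text and i.lower() not in owner_ids for i in identifiers):
            leaked.append(user)
    return sorted(leaked)


known = {
    "user_a": {"ORD-1001", "alice@example.com"},
    "user_b": {"ORD-2002", "bob@example.com"},
}
print(find_leaks("Your order ORD-2002 has shipped.", "user_a", known))
print(find_leaks("Your order ORD-1001 has shipped.", "user_a", known))
```

A non-empty result is a strong signal to block the response and raise a critical alert before it reaches the user.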

The Prompt Injection Attack: Clever user convinced agent to ignore its instructions. Lesson: Behavioral anomaly detection.

Advanced Observability Techniques

Techniques I’ve developed for deep insights:

Semantic Fingerprinting: Create unique signatures for expected response patterns:

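import hashlib


def semantic_fingerprint(response: str) -> str:
    # extract_entities, analyze_sentiment and analyze_structure are assumed to be defined elsewhere.
    # Extract key semantic elements
    entities = extract_entities(response)
    sentiment = analyze_sentiment(response)
    structure = analyze_structure(response)

    # Sort entities so the fingerprint does not depend on extraction order
    # (assumes extract_entities returns an iterable of strings).
    return hashlib.sha256(
        f"{sorted(entities)}:{sentiment}:{structure}".encode()
    ).hexdigest()[:16]

Conversation Flow Analysis: Track how conversations evolve: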

class ConversationFlowAnalyzer:
    def analyze_flow(self, conversation: List[Turn]):
        transitions = self._extract_transitions(conversation)
        coherence_score = self._calculate_coherence(transitions)
        drift_score = self._calculate_drift(conversation)

        return {
            "coherence": coherence_score,
            "drift": drift_score,
            "unusual_patterns": self._detect_unusual_patterns(transitions),
            "estimated_quality": coherence_score * (1 - drift_score)
        }

The Future of AI Observability

As I look ahead, I see exciting developments:

- Self-monitoring agents that detect their own anomalies

- Predictive monitoring that anticipates problems

- Automated prompt optimization based on observability data

- Cross-agent behavioral analysis

- Real-time hallucination detection and correction

Final Reflections: Observability as a Discipline

Building observability for Azure AI Agents taught me that monitoring AI isn’t just about watching metrics – it’s about understanding behavior. Every anomaly tells a story, every drift reveals a pattern, and every incident teaches a lesson.

The 3 AM call that started this journey? We traced it to a subtle prompt change that seemed harmless but fundamentally altered agent behavior. Now, with comprehensive observability, we catch these issues in minutes, not hours.

For those building production AI systems, remember: your agents are only as reliable as your ability to observe them. Invest in observability early, instrument comprehensively, and never assume AI will behave predictably. The goal isn’t to prevent all problems – it’s to detect and respond to them before they impact users.

As I close this reflection, I’m grateful for every incident, every anomaly, and every late-night debugging session. They’ve taught me that in the world of AI, observability isn’t optional – it’s the difference between hoping your agents work and knowing they do.