Looking at my first AI agent architecture diagram from two years ago makes me cringe. It was monolithic, tightly coupled, and completely unprepared for the rapid evolution we’ve witnessed. That painful realization taught me that in the world of AI, if you’re not building for change, you’re building for obsolescence.

Current Landscape Analysis: Where We Stand Today

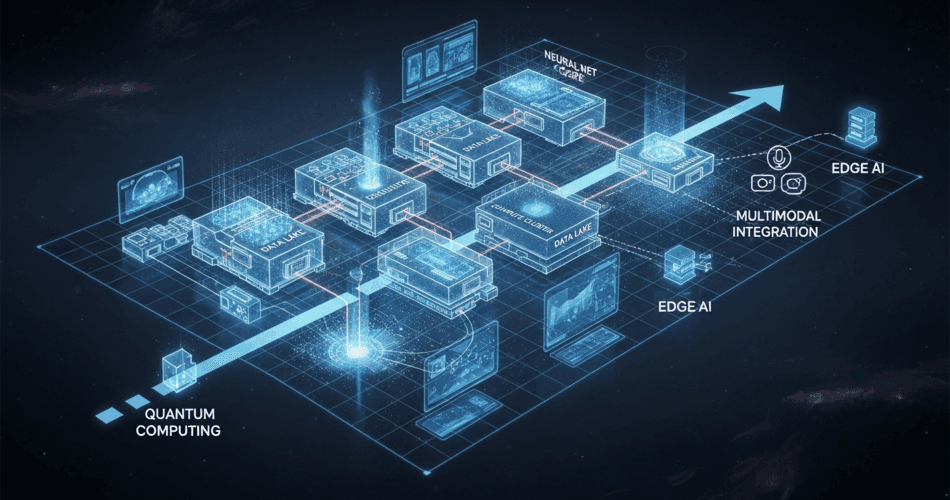

AI agent technology is moving at breakneck speed. What seemed cutting-edge six months ago feels dated today. I’ve watched GPT-3.5 give way to GPT-4, seen the rise of specialized models, and witnessed the emergence of multimodal capabilities that seemed like science fiction until recently.

Azure roadmap insights have become my crystal ball. Microsoft’s aggressive push into AI isn’t slowing down – if anything, it’s accelerating. Recent announcements about agent orchestration, enhanced security models, and edge deployment capabilities tell me we’re just scratching the surface.

Industry adoption patterns reveal fascinating trends:

- Enterprises moving from experimentation to production at scale

- Shift from single agents to complex multi-agent systems

- Growing demand for domain-specific capabilities

- Increasing focus on cost optimization and efficiency

- Rising importance of explainability and governance

What strikes me most is how quickly the ‘experimental’ becomes ‘essential.’ Features I treated as nice-to-haves last year are now fundamental requirements.

Scalable Architecture Design: Building for Growth

Adopting a microservices approach for agents transformed how I think about AI systems. Instead of monolithic agents trying to do everything, I now build specialized microagents:

Agent Architecture:

Core Services:

- Language Understanding Service

- Context Management Service

- Decision Engine Service

- Response Generation Service

- Memory Service

Supporting Services:

- Authentication Gateway

- Rate Limiting Service

- Monitoring Collector

- Cost Management Service

- Model Router Service

Benefits:

- Independent scaling

- Isolated failures

- Technology flexibility

- Easier testing

- Gradual upgrades

Event-driven architectures became my secret weapon for flexibility:

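```python
from typing import Awaitable, Callable

# AgentEvent (with a .type attribute) and the event bus are defined elsewhere;
# the bus only needs a subscribe(event_type, handler) method.
Handler = Callable[["AgentEvent"], Awaitable[None]]


class EventDrivenAgent:
    def __init__(self, event_bus):
        self.event_bus = event_bus  # injected so tests can pass a fake bus
        self.handlers: dict[str, Handler] = {}

        # Register built-in handlers through the same path as new capabilities,
        # so the bus subscriptions and the local dispatch table stay in sync.
        # process_query, refresh_context, adapt_behavior and
        # handle_unknown_event are agent methods defined elsewhere.
        self.add_capability('query.received', self.process_query)
        self.add_capability('context.updated', self.refresh_context)
        self.add_capability('model.changed', self.adapt_behavior)

    async def process_event(self, event: "AgentEvent"):
        # Decoupled processing: look up the handler by event type
        handler = self.handlers.get(event.type)
        if handler is not None:
            await handler(event)
        else:
            await self.handle_unknown_event(event)

    def add_capability(self, event_type: str, handler: Handler):
        # Dynamic capability addition: existing handlers are untouched
        self.handlers[event_type] = handler
        self.event_bus.subscribe(event_type, handler)
```

This approach lets me add new capabilities without touching existing code – crucial for rapid evolution.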
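To make the "new capabilities without touching existing code" point concrete, here is a minimal, self-contained sketch. `SimpleEventBus` is a hypothetical in-process stand-in for the `EventBus` used above (a production system might put a message broker behind the same `subscribe`/`publish` shape), and the `translation.requested` capability is purely illustrative.

```python
import asyncio
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Any, Awaitable, Callable


@dataclass
class AgentEvent:
    type: str
    payload: dict[str, Any] = field(default_factory=dict)


Handler = Callable[[AgentEvent], Awaitable[None]]


class SimpleEventBus:
    """Hypothetical in-process bus: fans each event out to its subscribers."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    async def publish(self, event: AgentEvent) -> int:
        # Run every handler for this event type concurrently; return how many ran
        handlers = self._subscribers.get(event.type, [])
        await asyncio.gather(*(h(event) for h in handlers))
        return len(handlers)


async def main() -> list[str]:
    bus = SimpleEventBus()
    log: list[str] = []

    async def process_query(event: AgentEvent) -> None:
        log.append(f"query: {event.payload['text']}")

    bus.subscribe("query.received", process_query)

    # A new capability arrives later as just another subscription;
    # process_query and the bus itself are left unchanged.
    async def translate(event: AgentEvent) -> None:
        log.append(f"translate to {event.payload['lang']}")

    bus.subscribe("translation.requested", translate)

    await bus.publish(AgentEvent("query.received", {"text": "hello"}))
    await bus.publish(AgentEvent("translation.requested", {"lang": "fr"}))
    await bus.publish(AgentEvent("unknown.event"))  # no subscribers, nothing runs
    return log


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Publishing an event nobody subscribes to is simply a no-op here; the `EventDrivenAgent` above instead routes unknown events to a dedicated handler, which is the better choice when you want unhandled events logged.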

Container orchestration with AKS (Azure Kubernetes Service) solved my deployment nightmares:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ai-agent-ensemble
spec:
  replicas: 3
  # apps/v1 requires a selector matching the pod template labels
  # (the label value here is illustrative)
  selector:
    matchLabels:
      app: ai-agent-ensemble
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0   # never drop below 3 ready pods during an update
  template:
    metadata:
      labels:
        app: ai-agent-ensemble
    spec:
      containers:
        - name: language-agent
          image: myregistry.azurecr.io/language-agent:v2.1
          resources:
            requests:
              memory: "2Gi"
              cpu: "1"
            limits:
              memory: "4Gi"
              cpu: "2"
          env:
            - name: MODEL_VERSION
              value: "gpt-4-turbo"
            - name: ENABLE_CACHE
              value: "true"
        # Sidecar pattern for shared context
        - name: context-manager
          image: myregistry.azurecr.io/context-manager:v1.8
```

Building scalable designs in code taught me which patterns actually work:

The Circuit Breaker Pattern:

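```python
import time


class AIAgentCircuitBreaker:
    def __init__(self, agent, failure_threshold=5, timeout=60):
        self.agent = agent                  # wrapped agent exposing async process()
        self.failure_count = 0
        self.failure_threshold = failure_threshold
        self.timeout = timeout              # seconds to stay OPEN before probing
        self.last_failure_time = None
        self.state = 'CLOSED'               # CLOSED, OPEN, HALF_OPEN

    async def call_agent(self, request):
        if self.state == 'OPEN':
            # monotonic() is immune to wall-clock adjustments
            if time.monotonic() - self.last_failure_time > self.timeout:
                self.state = 'HALF_OPEN'    # let a trial request through
            else:
                return self.fallback_response(request)

        try:
            response = await self.agent.process(request)
        except Exception:
            self.failure_count += 1
            self.last_failure_time = time.monotonic()
            # A failed probe, or too many failures, trips the breaker
            if self.state == 'HALF_OPEN' or self.failure_count >= self.failure_threshold:
                self.state = 'OPEN'
            return self.fallback_response(request)

        # Any success closes the circuit and clears the failure count
        self.state = 'CLOSED'
        self.failure_count = 0
        return response

    def fallback_response(self, request):
        # Placeholder: a real system might return a cached or canned answer
        return {"fallback": True, "request": request}
```

Evolution Strategies: Embracing Change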

Version management for agents became as critical as version management for code:

```python
class VersionedAgent:
    def __init__(self):
        # AgentV1, AgentV11 and AgentV2 are concrete agent classes defined elsewhere
        self.versions = {
            'v1.0': AgentV1(),
            'v1.1': AgentV11(),
            'v2.0': AgentV2(),
        }
        # Map release channels to concrete versions; promoting a release
        # is a one-line change here, not a change in every caller
        self.routing_rules = {
            'production': 'v1.1',
            'beta': 'v2.0',
            'legacy': 'v1.0',
        }

    async def route_request(self, request, context):
        # Intelligent routing based on context
        if context.user_segment == 'beta_testers':
            channel = 'beta'
        elif context.requires_legacy:
            channel = 'legacy'
        else:
            channel = 'production'

        agent = self.versions[self.routing_rules[channel]]
        return await agent.process(request)
```

A/B testing infrastructure lets me evolve with confidence:

```python
import time
from collections import defaultdict
from typing import List


class ABTestingFramework:
    # MetricsCollector, AgentVariant and the _calculate_traffic_split,
    # _select_variant and _get_satisfaction helpers are defined elsewhere
    def __init__(self):
        self.experiments = {}
        self.metrics_collector = MetricsCollector()

    def create_experiment(self, name: str, variants: List["AgentVariant"]):
        self.experiments[name] = {
            'variants': variants,
            'traffic_split': self._calculate_traffic_split(variants),
            'metrics': defaultdict(list),
        }

    async def process_with_experiment(self, request, experiment_name):
        experiment = self.experiments[experiment_name]
        variant = self._select_variant(experiment['traffic_split'])

        # perf_counter() is monotonic and suited to measuring durations
        start_time = time.perf_counter()
        response = await variant.agent.process(request)
        duration = time.perf_counter() - start_time

        # Collect per-variant metrics so variants can be compared later
        self.metrics_collector.record({
            'experiment': experiment_name,
            'variant': variant.name,
            'duration': duration,
            'success': response.success,
            'user_satisfaction': await self._get_satisfaction(response),
        })

        return response
```

Gradual rollout techniques saved me from catastrophic failures:

```python
import random


class GradualRollout:
    def __init__(self, new_version, old_version):
        self.new_version = new_version
        self.old_version = old_version
        self.rollout_percentage = 0             # share of traffic (0-100) on new_version
        self.health_monitor = HealthMonitor()   # defined elsewhere

    async def process(self, request):
        if random.random() * 100 < self.rollout_percentage:
            # Use new version
            response = await self.new_version.process(request)
            health = self.health_monitor.check(response)
            if health.is_degraded:
                # Automatic rollback: shift 10 points of traffic back to the
                # old version (alert_team is defined elsewhere)
                self.rollout_percentage = max(0, self.rollout_percentage - 10)
                await self.alert_team("Health degradation detected")
            return response

        # Use old version
        return await self.old_version.process(request)

    def increase_rollout(self, percentage):
        self.rollout_percentage = min(100, self.rollout_percentage + percentage)
```

Backward compatibility became non-negotiable:

```python
class BackwardCompatibleAgent:
    def __init__(self):
        # One handler per supported API version (handlers defined elsewhere)
        self.api_versions = {
            'v1': self.handle_v1_request,
            'v2': self.handle_v2_request,
            'v3': self.handle_v3_request,
        }

    async def process(self, request):
        # Clients that send no version header get the oldest contract
        api_version = request.headers.get('api-version', 'v1')

        if api_version in self.api_versions:
            # Handle with appropriate version
            response = await self.api_versions[api_version](request)
        else:
            # Attempt to handle with latest, with compatibility layer
            response = await self.handle_with_compatibility(request, api_version)

        return self.format_response(response, api_version)
```

Future Technologies Integration: Preparing for What’s Next


Preparing for new LLMs requires abstraction:

```python
class ModelAgnosticAgent:
    def __init__(self):
        # Adapter classes are defined elsewhere; each exposes async generate(request)
        self.model_adapters = {
            'gpt-4': GPT4Adapter(),
            'gpt-5': GPT5Adapter(),  # Future-proofing
            'claude-3': ClaudeAdapter(),
            'gemini-ultra': GeminiAdapter(),
            'custom-model': CustomModelAdapter(),
        }

    async def process(self, request, model_preference=None):
        # Dynamic model selection
        model = self.select_optimal_model(request, model_preference)
        adapter = self.model_adapters[model]

        # Unified interface regardless of model
        return await adapter.generate(request)
```

Multimodal agent capabilities are already changing everything:

```python
from typing import List


class MultiModalAgent:
    def __init__(self):
        # One processor per modality (processor classes defined elsewhere)
        self.modality_processors = {
            'text': TextProcessor(),
            'image': ImageProcessor(),
            'audio': AudioProcessor(),
            'video': VideoProcessor(),
            'code': CodeProcessor(),
        }

    async def process_multimodal(self, inputs: List["ModalInput"]):
        # Process each input with the processor for its modality
        processed_inputs = []
        for item in inputs:  # 'item' avoids shadowing the built-in input()
            processor = self.modality_processors[item.modality]
            processed = await processor.process(item.data)
            processed_inputs.append(processed)

        # Unified reasoning across modalities
        return await self.unified_reasoning(processed_inputs)
```

Edge deployment considerations keep me thinking about distributed AI:

```python
GB = 1024 ** 3  # bytes per gigabyte


class EdgeAwareAgent:
    def __init__(self, edge_threshold: float):
        self.edge_models = {}
        self.cloud_endpoint = "https://api.azure.com/ai"
        # Requests whose complexity score exceeds this go to the cloud
        self.edge_threshold = edge_threshold

    async def process(self, request, location='cloud'):
        if location == 'edge' and request.complexity <= self.edge_threshold:
            # Use lightweight edge model for simple requests
            return await self.edge_process(request)
        # Offload complex edge requests; standard cloud processing otherwise
        return await self.cloud_process(request)

    def deploy_to_edge(self, device_capability):
        # Adaptive model selection for edge (memory assumed to be in bytes;
        # tiny/small/standard models are attributes set up elsewhere)
        if device_capability.memory < 2 * GB:
            return self.tiny_model
        elif device_capability.memory < 8 * GB:
            return self.small_model
        else:
            return self.standard_model
```

Quantum-ready architectures might seem far-fetched, but I’m preparing:

```python
class QuantumReadyArchitecture:
    # Problem types with potential quantum advantage
    QUANTUM_CANDIDATE_TYPES = {
        'optimization',
        'cryptography',
        'simulation',
        'large_scale_search',
    }

    def __init__(self):
        # Both processors are defined elsewhere
        self.classical_processor = ClassicalAIProcessor()
        self.quantum_interface = QuantumInterface()

    async def process(self, request):
        if self.is_quantum_suitable(request):
            # Problems suited for quantum advantage
            quantum_result = await self.quantum_interface.process(
                self.encode_for_quantum(request)
            )
            return self.decode_quantum_result(quantum_result)
        # Standard processing
        return await self.classical_processor.process(request)

    def is_quantum_suitable(self, request):
        # Identify problems with potential quantum advantage
        return request.problem_type in self.QUANTUM_CANDIDATE_TYPES
```

Patterns for Long-Term Success

Patterns I’ve identified for sustainable AI architecture:

The Adapter Pattern: Abstract away external dependencies

The Strategy Pattern: Swap algorithms without changing structure

The Observer Pattern: React to changes in the AI landscape

The Facade Pattern: Simplify complex multi-agent interactions

The Proxy Pattern: Add capabilities without modifying agents
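Here is a minimal sketch of the Proxy pattern applied to agents, assuming only that an agent exposes an async `process(request)` method like the agents in this article. `EchoAgent` and `CachingProxy` are hypothetical names for illustration: the proxy presents the same interface as the agent it wraps and adds response caching without changing the agent's code.

```python
import asyncio
from typing import Any, Protocol


class Agent(Protocol):
    async def process(self, request: str) -> Any: ...


class EchoAgent:
    """Hypothetical stand-in for a real (slow, costly) agent."""

    def __init__(self) -> None:
        self.calls = 0

    async def process(self, request: str) -> str:
        self.calls += 1
        return f"answer to: {request}"


class CachingProxy:
    """Same interface as the wrapped agent; adds a cache in front of it."""

    def __init__(self, agent: Agent) -> None:
        self._agent = agent
        self._cache: dict[str, Any] = {}

    async def process(self, request: str) -> Any:
        if request not in self._cache:
            # Only cache misses reach the wrapped agent
            self._cache[request] = await self._agent.process(request)
        return self._cache[request]


async def main() -> tuple[list[str], int]:
    backend = EchoAgent()
    agent: Agent = CachingProxy(backend)  # callers can't tell the difference
    answers = [await agent.process(q) for q in ["hi", "hi", "bye"]]
    return answers, backend.calls


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Keying the cache on the exact request text is the simplest policy; a real deployment would also need eviction and a decision about whether identical prompts should always get identical answers. The same wrapping approach works for logging, rate limiting, or cost tracking.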

Real-World Evolution Stories

Let me share how these strategies played out in practice:

The Great Model Migration: When GPT-4 launched, our abstraction layer let us migrate 50+ agents in 2 days instead of 2 months.

The Multimodal Pivot: When image understanding became crucial, our modular architecture let us add vision capabilities without touching existing code.

The Edge Expansion: When latency requirements pushed us to edge deployment, our distributed architecture adapted seamlessly.

The Compliance Crisis: When new regulations hit, our versioning system let us maintain compliant versions while developing updates.

Investment Areas for Future-Proofing

Based on my experience, invest in:

- Abstraction Layers: Between your code and external services

- Monitoring Infrastructure: You can’t improve what you can’t measure

- Testing Frameworks: Especially for AI behavior validation

- Documentation Systems: Future you will thank present you

- Team Education: The best architecture means nothing without skilled people

The Philosophy of Future-Proofing

Future-proofing isn’t about predicting the future – it’s about building systems that can adapt to whatever comes. Every architectural decision I make now asks three questions:

- How hard will this be to change?

- What assumptions am I making?

- Where might the technology go?

Looking Ahead: My Predictions and Preparations

As I look toward the horizon, I see:

- AI agents becoming as commonplace as web servers

- Multi-agent systems solving problems we can’t imagine today

- Edge AI making centralized processing feel antiquated

- Quantum-AI hybrid systems tackling previously impossible problems

But more importantly, I see the need for architectures that can evolve into whatever the future demands.

Final Reflections: Building for the Unknown

Future-proofing your Azure AI Agent architecture isn’t about having all the answers – it’s about building systems that can find new answers as questions change. Every pattern I’ve shared, every code example I’ve provided, is really about one thing: embracing change as a constant.

The architecture diagrams I draw today will look as quaint in two years as my old ones do now. And that’s okay. Because if I’ve built them right, they’ll evolve into something better.

For those architecting AI systems today, my advice is simple: Build for change, not for permanence. Make your peace with obsolescence, but fight it with flexibility. And always remember – in the world of AI, the only constant is acceleration.

As I close this reflection on future-proofing, I’m filled with excitement about what’s coming. The architectures we build today are the foundations of tomorrow’s innovations. Make them strong, make them flexible, and most importantly, make them ready for a future none of us can fully predict.